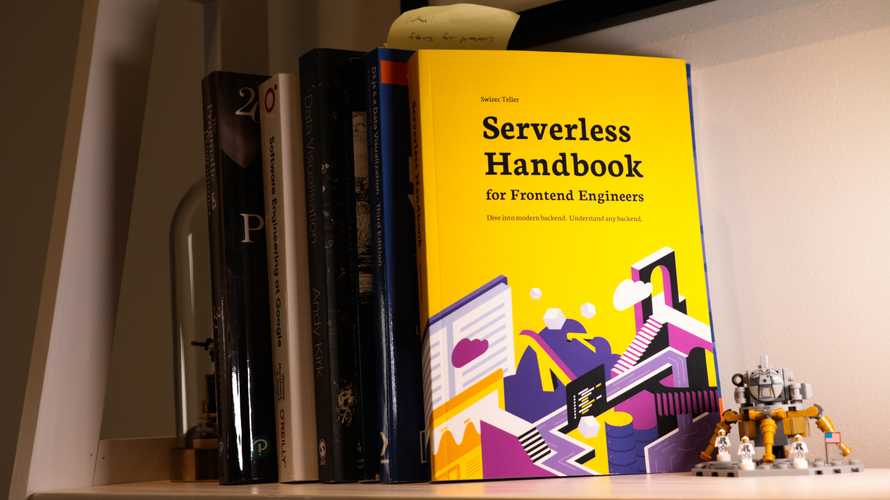

Getting Started with Serverless

Hello friend ❤️

I'm happy you're giving serverless a try. It's one of the most exciting shifts in web development since React introduced us to components.

Creating your first serverless application can be intimidating. Type "serverless" into Google and you're hit with millions of results, all assuming you know what you're doing.

There's Serverless, the open source framework. Then there's AWS Serverless, and a "serverless computing" Wikipedia article. Your friends mention Lambda functions, then there are cloud functions from Netlify and Vercel ... and aren't Heroku, Microsoft Azure, and DigitalOcean Droplets a type of serverless? "Cloudflare edge workers!" someone shouts in the background.

It's all one big mess.

That's why I created the Serverless Handbook: the resource I wish I had :)

Let's start with a short history lesson to get a better understanding of what serverless is and what it isn't. Then you'll build your first serverless backend: an app that says Hello 👋

Don't want the intro? Jump straight to your first app

What is serverless

Serverless is other people's servers running your code.

It's the logical next step after platform as a service, which came from The Cloud, which came from virtual private servers, which came from colocation, which came from a computer on your desk running a web server. 🤯

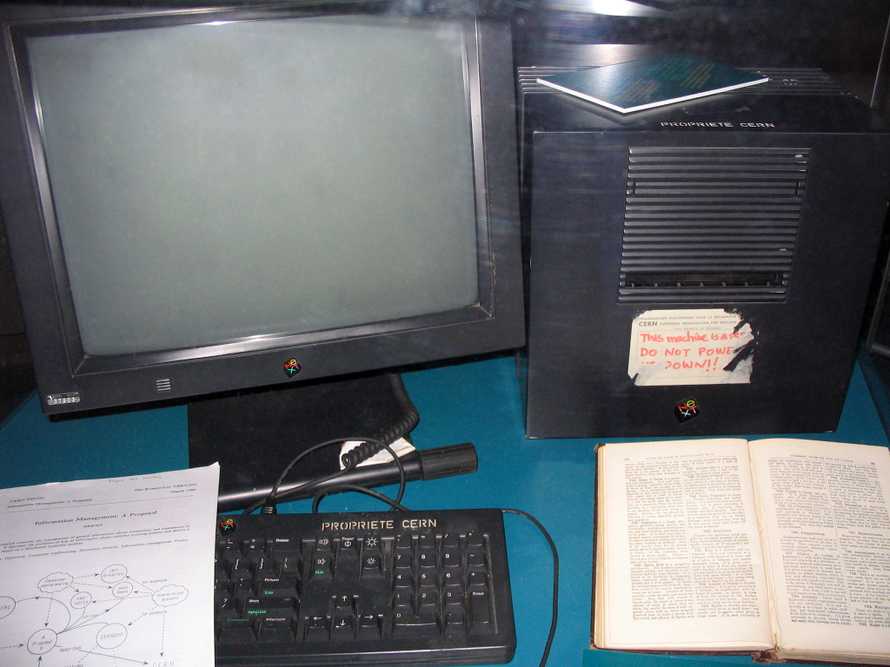

First we all had servers.

You installed Linux on a computer, hooked it up to the internet, begged your internet provider for a static IP address, and let it run 24/7. Mine lived in the bedroom, and I'll never forget that IP. Good ol' 193.77.212.100.

With a static IP address, you can point a domain at your server: DNS servers map the domain to your IP, so people can type that domain into their browser and reach your server.

But a domain doesn't give you a website or a webapp.

For that, you need to configure Apache or Nginx, set it up as a reverse proxy that talks to your application, run your application, make sure it stays running, and ... it gets out of hand fast. All that just to put up a simple website.
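To make those moving parts a little more concrete, here's a minimal Python sketch of the reverse-proxy setup: one server plays your application, and a second one plays the Nginx role, accepting requests and forwarding them to the app (roughly what Nginx's `proxy_pass` does). It's an illustration only, not a replacement for Nginx: it handles GET requests only, doesn't forward request headers, and doesn't handle upstream errors. The names `AppHandler`, `make_proxy_handler`, and `run_stack` are hypothetical, made up for this example.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class AppHandler(BaseHTTPRequestHandler):
    """Hypothetical application: the code you actually care about."""

    def do_GET(self):
        body = b"Hello from the app"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def make_proxy_handler(upstream):
    """Build a handler that forwards GET requests to `upstream` (the app)."""

    class ProxyHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            # Forward the request path to the application server.
            with urllib.request.urlopen(upstream + self.path) as resp:
                body = resp.read()
                status = resp.status
                content_type = resp.headers.get("Content-Type", "application/octet-stream")
            # Relay the application's response back to the client.
            self.send_response(status)
            self.send_header("Content-Type", content_type)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass

    return ProxyHandler


def start(server):
    """Run an HTTP server in a background daemon thread."""
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def run_stack():
    """Start the app, then a proxy pointing at it. Port 0 lets the OS pick free ports."""
    app = start(ThreadingHTTPServer(("127.0.0.1", 0), AppHandler))
    upstream = f"http://127.0.0.1:{app.server_address[1]}"
    proxy = start(ThreadingHTTPServer(("127.0.0.1", 0), make_proxy_handler(upstream)))
    return app, proxy


if __name__ == "__main__":
    app, proxy = run_stack()
    url = f"http://127.0.0.1:{proxy.server_address[1]}/"
    with urllib.request.urlopen(url) as resp:
        print(resp.read().decode())
    proxy.shutdown()
    app.shutdown()
```

Even this toy version means two processes to start, two ports to manage, and nothing to restart either one when it crashes. That last part is the "make sure it stays running" chore, and on a real server it's yours too.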

Then came colocation

Colocation was a solution for the bedroom problem. What happens if your house catches fire? What if power goes out? Or Mom trips on the power cable and unplugs your computer?

Residential hosting is not reliable.

Residential internet is a lower tier than what a business gets. It's less reliable, and if the provider needs to do maintenance, they think nothing of shutting off your pipes during off-peak hours. Your server needs strong internet 24/7.

When you go on vacation, nobody's there to care for your server. Your site might be down for a week before you notice. 😱

Colocation lets you take that same server and put it in a data center. They supply the rack space, stable power, good internet, and physical security.

You're left to deal with configuration, maintenance, and replacing hard drives when they fail.

PS: Computers break all the time. A large data center replaces a hard drive every few minutes, simply because a typical drive lasts about 4 years, and when you have hundreds of thousands of drives, the stats are not in your favor.
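Here's a back-of-the-envelope Python sketch of how fleet size drives that replacement rate. It assumes, as a simplification, that every drive lasts exactly 4 years and that failures are spread evenly over time, so a fleet of N drives loses about N drives per 4-year lifetime. `minutes_between_failures` is a hypothetical helper written for this example.

```python
# Simplifying assumption: every drive lasts exactly `lifetime_years`, and
# replacements are spread evenly over time (a steady-state fleet).
MINUTES_PER_YEAR = 365.25 * 24 * 60


def minutes_between_failures(drive_count, lifetime_years=4.0):
    """Average minutes between drive replacements in a fleet of `drive_count` drives."""
    lifetime_minutes = lifetime_years * MINUTES_PER_YEAR
    # The fleet loses drive_count drives per lifetime, so divide the lifetime evenly.
    return lifetime_minutes / drive_count


for drives in (1_000, 10_000, 100_000, 1_000_000):
    gap = minutes_between_failures(drives)
    print(f"{drives:>9,} drives: one replacement every {gap:,.1f} minutes")
```

Under these assumptions, 10,000 drives works out to roughly one replacement every 3.5 hours. You need hundreds of thousands of drives before it drops to one every few minutes.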

It's on you to keep everything running.