Lambda pipelines for serverless data processing

You get tens of thousands of events per hour. How do you process that?

You've got a ton of data. What do you do?

Users send hundreds of messages per minute. Now what?

You could learn Elixir and Erlang – purpose-built languages for message processing used in networking. But is that where you want your career to go?

You could try Kafka or Hadoop. Tools designed for big data, used by large organizations for mind-boggling amounts of data. Are you ready for that?

Elixir, Erlang, Kafka, and Hadoop are wonderful tools, if you know how to use them. But they come with a significant learning curve and plenty of devops work to keep them running.

You have to maintain servers, write code in obscure languages, and deal with problems we're trying to avoid.

Serverless data processing

Instead, you can leverage existing skills to build a data processing pipeline.

I've used this approach to process millions of daily events with barely a 0.0007% loss of data. A rate of 7 events lost per 1,000,000.¹

We used it to gather business and engineering analytics. A distributed console.log that writes to a central database. That's how I know you should never build a distributed logging system unless it's your core business 😉

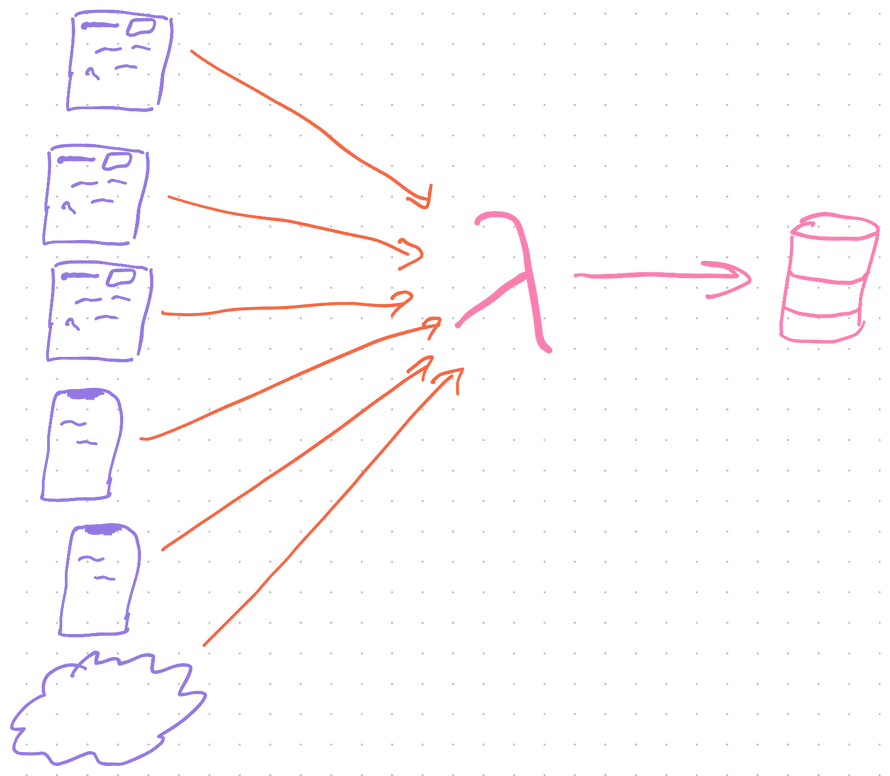

The system accepts batches of events, adds info about user and server state, then saves each event for easy retrieval.
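To make that flow concrete, here's a minimal sketch of the accept, enrich, save loop. It's an illustration, not the original system: `enrichEvent`, `processBatch`, the `context` fields, and the `saveEvent` callback are all hypothetical names, and the storage layer is left for you to plug in.

```javascript
// Hypothetical sketch. Names and event shapes are assumptions,
// not the API of the system described above.

// Adds user and server state to a single event.
function enrichEvent(event, context) {
  return {
    ...event,
    userId: context.userId,
    serverTime: context.serverTime,
  };
}

// Accepts a batch, enriches every event, then saves each one.
// `saveEvent` is any async function that persists a single event.
async function processBatch(events, context, saveEvent) {
  const enriched = events.map((event) => enrichEvent(event, context));

  // allSettled lets one failed save fail alone instead of sinking the batch
  const results = await Promise.allSettled(
    enriched.map((event) => saveEvent(event))
  );
  const failed = results.filter((r) => r.status === "rejected").length;

  return { saved: enriched.length - failed, failed };
}
```

Saving events one by one means a bad event costs you that event, not its neighbors. Counting failures gives you a number to watch when you care about your loss rate.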

It was so convenient, we even used it for tracing and debugging in production. Pepper your code with console.log, wait for an error, see what happened.

A similar system can process almost anything.

It's great for problems you can split into independent tasks, like prepping data. It's less great for large interdependent operations like machine learning.

Architectures for serverless data processing

Serverless data processing works like .map and .reduce at scale. It's inspired by Google's famous MapReduce programming model, which many big data processing frameworks use.

Work happens in 3 steps:

- Accept chunks of data

- Map over your data

- Reduce into output format

Say you're building an adder: multiply every number by 2 then sum.
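On a single machine, a rough sketch of that adder with JavaScript's built-in `.map` and `.reduce` could look like this. The names `double`, `add`, and `doubleAndSum`, and the way the input is chunked, are made up for illustration.

```javascript
// Map step: double a single number.
const double = (n) => n * 2;

// Reduce step: add a number to the running total.
const add = (sum, n) => sum + n;

// Map over the data, then reduce into the output format.
function doubleAndSum(numbers) {
  return numbers.map(double).reduce(add, 0);
}

console.log(doubleAndSum([1, 2, 3, 4])); // 20

// The same work split into independent chunks.
// Each chunk could be handled by a separate worker.
const chunks = [[1, 2], [3, 4]];
const partialSums = chunks.map(doubleAndSum); // [6, 14]
console.log(partialSums.reduce(add, 0)); // 20
```

Both versions give the same answer because no chunk needs to know about any other chunk. That independence is what lets you spread the work across many small functions.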