Elements of serverless – lambdas, queues, gateways, and more

Serverless is about combining small elements into a whole. But what are the elements and how do they fit together?

We mentioned lambdas, queues, and a few others in previous chapters – Architecture Principles and Serverless Flavors. Let's see how they work.

Lambda – a cloud function

"Lambda" comes from lambda calculus – the mathematical foundation of functional programming that Alonzo Church introduced in the 1930s. It's an alternative to Turing machines. Both describe a system that can solve any solvable problem. Turing machines use iterative step-by-step programming; lambda calculus uses functions-calling-functions programming.

Both are equal in power.

AWS named their cloud functions AWS Lambda. As the platform grew in popularity, the word "lambda" morphed into a generic term for cloud functions, the core building block of serverless computing.

A lambda is a function. In this context, a function running as its own tiny server triggered by an event.

Here's a lambda function that returns "Hello world" in response to an HTTP request.

    // src/handler.ts
    import { APIGatewayEvent } from "aws-lambda";

    // Respond to any HTTP request with a plain "Hello world"
    export const handler = async (event: APIGatewayEvent) => {
      return {
        statusCode: 200,
        body: "Hello world",
      };
    };

The TypeScript file exports a function called handler. The function accepts an event and returns a response. The AWS Lambda platform handles the rest.

Because this is a user-facing API method, it accepts an AWS API Gateway event and returns an HTTP-style response: a status code and a body.

Other providers and services have different events and expect different responses. A lambda always follows this pattern 👉 function with an event and a return value.
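Because a lambda is just an exported function, you can try the pattern locally by calling it with a hand-built event. Here is a minimal sketch of that idea. It assumes simplified stand-in types (`HttpEvent`, `HttpResponse`) instead of the full `aws-lambda` typings, and the `name` query parameter is made up for illustration.

```typescript
// Simplified stand-ins for the API Gateway event and response shapes.
// These are assumptions for illustration, not the full aws-lambda types.
type HttpEvent = {
  queryStringParameters: Record<string, string> | null;
};

type HttpResponse = {
  statusCode: number;
  body: string;
};

// Same pattern as before: take an event, return a response
export const handler = async (event: HttpEvent): Promise<HttpResponse> => {
  // "name" is a hypothetical query parameter
  const name = event.queryStringParameters?.name ?? "world";

  return {
    statusCode: 200,
    body: `Hello ${name}`,
  };
};

// Call the function directly, no cloud required
handler({ queryStringParameters: { name: "serverless" } }).then((response) =>
  console.log(response.statusCode, response.body)
);
```

The platform does the same thing on your behalf: it builds the event, calls your function, and turns the return value into a response.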

Considerations with lambda functions

Your functions should follow functional programming principles:

- idempotent – multiple calls with the same inputs produce the same result

- pure – rely on the arguments you're given and nothing else. Your environment does not persist, data in local memory might vanish.

- light on side-effects – you need side-effects to make changes like writing to a database. Make sure those come in the form of calling other functions and services. State inside your lambda does not persist.

- do one thing and one thing only – small functions focused on one task are easiest to understand and combine
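The principles above might look like this in practice. Treat it as a rough sketch: the `OrderEvent` type, the 20% tax rate, and the `Map` standing in for an external database are all assumptions invented for the example.

```typescript
// Hypothetical event and storage types, invented for this sketch
type OrderEvent = { orderId: string; amountCents: number };
type OrderRecord = { amountCents: number; taxCents: number };
// Stand-in for an external database; a real lambda would call a service
type Store = Map<string, OrderRecord>;

// Pure: the result depends only on the argument (20% is an assumed rate)
const taxFor = (amountCents: number): number => Math.round(amountCents * 0.2);

// Does one thing: saves an order. The side effect goes through a service
// passed in from outside, and nothing is kept in the function's own memory.
// Keying the write on orderId makes a retry overwrite the same record
// instead of creating a duplicate, which keeps the call idempotent.
export const saveOrder = async (event: OrderEvent, store: Store) => {
  store.set(event.orderId, {
    amountCents: event.amountCents,
    taxCents: taxFor(event.amountCents),
  });

  return { statusCode: 200, body: event.orderId };
};
```

Calling `saveOrder` twice with the same event leaves exactly one record in the store, so a retried invocation is harmless.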

Small functions work together to produce extraordinary results, like this example of combining Twilio and AWS Lambda to answer the door.

Creating lambdas

In the open source Serverless Framework, you define lambda functions with serverless.yml like this:

    functions:
      helloworld:
        handler: dist/helloworld.handler
        events:
          - http:
              path: helloworld
              method: GET
              cors: true

This defines a helloworld function and maps it to the handler method exported from dist/helloworld. We're using a build step for TypeScript – the code is in src/, we run it from dist/.

events lists the triggers that run this function. An HTTP GET request on the path /helloworld in our case.

Other typical triggers include Queues, S3 changes, CloudWatch events, and DynamoDB listeners. At least on AWS.
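A function behind a different trigger keeps the same shape; only the event changes. As a rough sketch, here is what a queue-triggered handler could look like. The `QueueEvent` and `QueueRecord` types are minimal stand-ins modeled on a batch of records with string bodies, and the `{ task }` payload is hypothetical.

```typescript
// Minimal stand-ins for a queue event: a batch of records, each carrying
// its message body as a string. Assumed for illustration.
type QueueRecord = { messageId: string; body: string };
type QueueEvent = { Records: QueueRecord[] };

// Same pattern, different trigger: an event comes in, a value goes out
export const handler = async (event: QueueEvent) => {
  const processed: string[] = [];

  for (const record of event.Records) {
    // The { task } payload is a made-up message format
    const message = JSON.parse(record.body) as { task: string };
    console.log(`Processing ${message.task}`);
    processed.push(record.messageId);
  }

  // Report which messages were handled
  return { processed };
};
```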

Queue

Queue is short for message queue – a service built on top of queue, the data structure. Software engineers aren't that inventive with names 🤷‍♂️

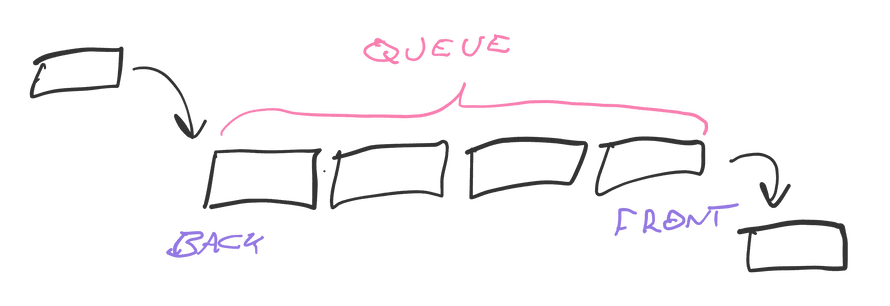

You can think of the queue data structure as a list of items.

Enqueueing adds items to the back of a queue; dequeueing takes them out of the front. Items in the middle wait their turn. Like a lunch-time burrito queue. First come, first served, or FIFO for short (first in, first out).
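To make the data structure concrete, here is a minimal sketch of a FIFO queue backed by an array. The `Queue` class, its method names, and the people in the burrito line are illustrative, not part of any library.

```typescript
// A minimal FIFO queue backed by an array
class Queue<T> {
  private items: T[] = [];

  // Add an item to the back of the queue
  enqueue(item: T): void {
    this.items.push(item);
  }

  // Take the item at the front, or undefined when the queue is empty
  dequeue(): T | undefined {
    return this.items.shift();
  }

  // Number of items still waiting
  get size(): number {
    return this.items.length;
  }
}

const burritoLine = new Queue<string>();
burritoLine.enqueue("Alice");
burritoLine.enqueue("Bob");
burritoLine.enqueue("Carol");

console.log(burritoLine.dequeue()); // Alice, because she was first in
console.log(burritoLine.size); // 2
```

An array with `shift()` is fine for a sketch. It gets slow for very long queues because every remaining item moves up one slot on each dequeue.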